|

Samuel Schmidgall Hello there. My name is Samuel Schmidgall. I am a research scientist at Google DeepMind working on biomedical research as well as post-training for Gemini. I will receive my PhD from Johns Hopkins University in Spring 2026. I was advised by Rama Chellappa and worked closely with Axel Krieger and Michael Moor. I also received support from the NSF Graduate Research Fellowship (NSF GRFP). Email / LinkedIn / Scholar / Twitter | X / GitHub |

|

Research |

|

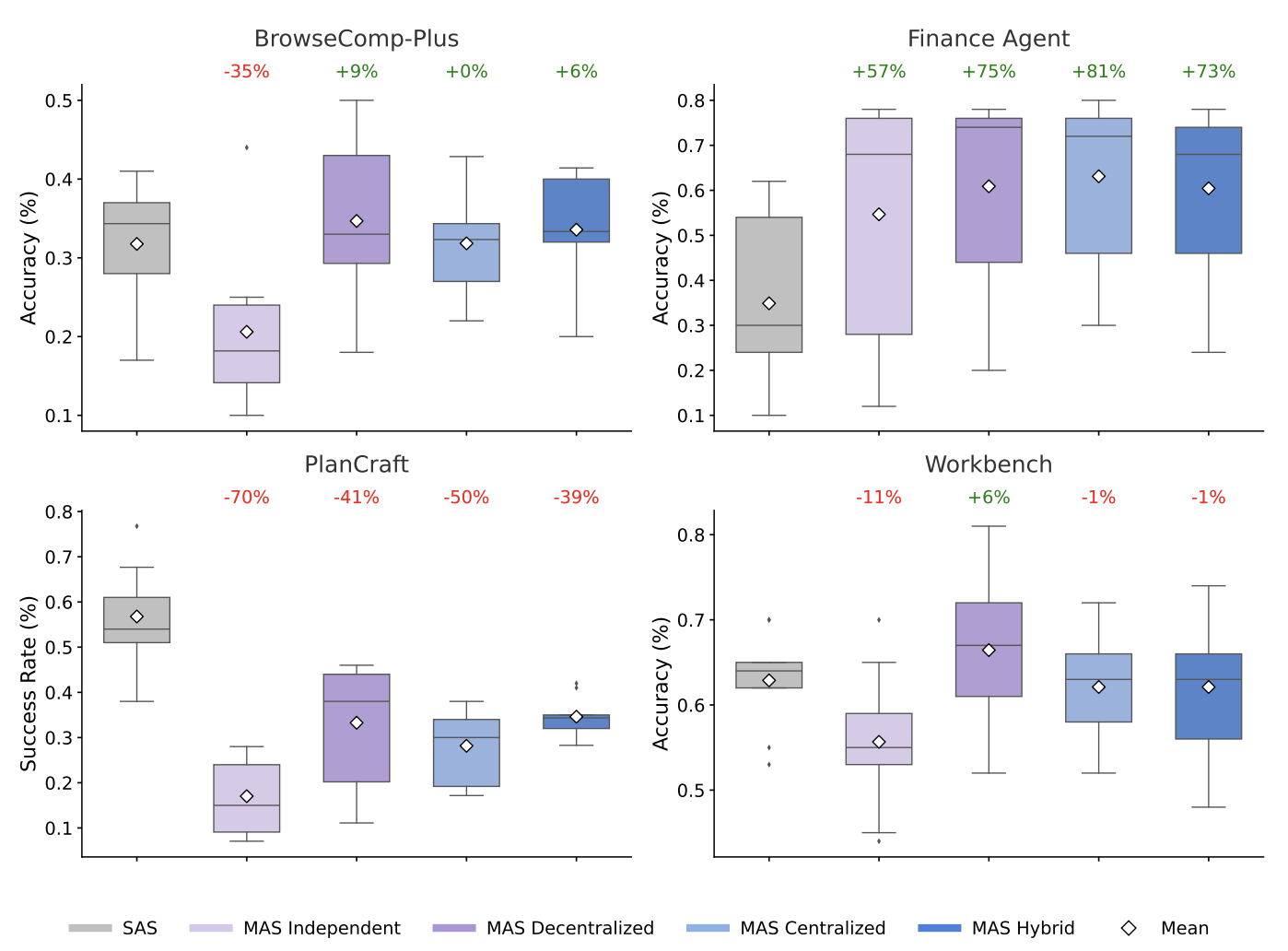

Towards a Science of Scaling Agent Systems

Yubin Kim, Ken Gu, Chanwoo Park, Chunjong Park, Samuel Schmidgall, A. Ali Heydari, Yao Yan, Zhihan Zhang, Yuchen Zhuang, Mark Malhotra, Paul Pu Liang, Hae Won Park, Yuzhe Yang, Xuhai Xu, Yilun Du, Shwetak Patel, Tim Althoff, Daniel McDuff and Xin Liu arXiv preprint arXiv:2512.08296, 2025 Agents, language model (LM)-based systems that are capable of reasoning, planning, and acting, are becoming the dominant paradigm for real-world AI applications. Despite this widespread adoption, the principles that determine their performance remain underexplored, leaving practitioners to rely on heuristics rather than principled design choices. We address this gap by deriving quantitative scaling principles for agent systems. |

|

Current validation practice undermines surgical AI development

Annika Reinke, Ziying O Li, Minu D Tizabi, Pascaline André, Marcel Knopp, Mika M Rother, Ines P Machado, Maria S Altieri, ..., Duygu Sarikaya, Samuel Schmidgall, Matthias Seibold, ..., Amin Madani, Danail Stoyanov, Stefanie Speidel, Daniel A Hashimoto, Fiona R Kolbinger, Lena Maier-Hein arXiv preprint arXiv:2511.03769, 2025 We introduce the first comprehensive catalog of validation pitfalls in AI-based surgical video analysis that was derived from a multi-stage Delphi process with 91 international experts. The collected pitfalls span three categories: (1) data (e.g., incomplete annotation, spurious correlations), (2) metric selection and configuration (e.g., neglect of temporal stability, mismatch with clinical needs), and (3) aggregation and reporting (e.g., clinically uninformative aggregation, failure to account for frame dependencies in hierarchical data structures). |

|

MedGemma Technical Report

MedGemma Team at Google Research and Google DeepMind arXiv preprint arXiv:2507.05201, 2025 MedGemma is a collection of medical vision-language foundation models based on Gemma 3 4B and 27B. MedGemma demonstrates advanced medical understanding and reasoning on images and text, significantly exceeding the performance of similar-sized generative models and approaching the performance of task-specific models, while maintaining the general capabilities of the Gemma 3 base models. |

|

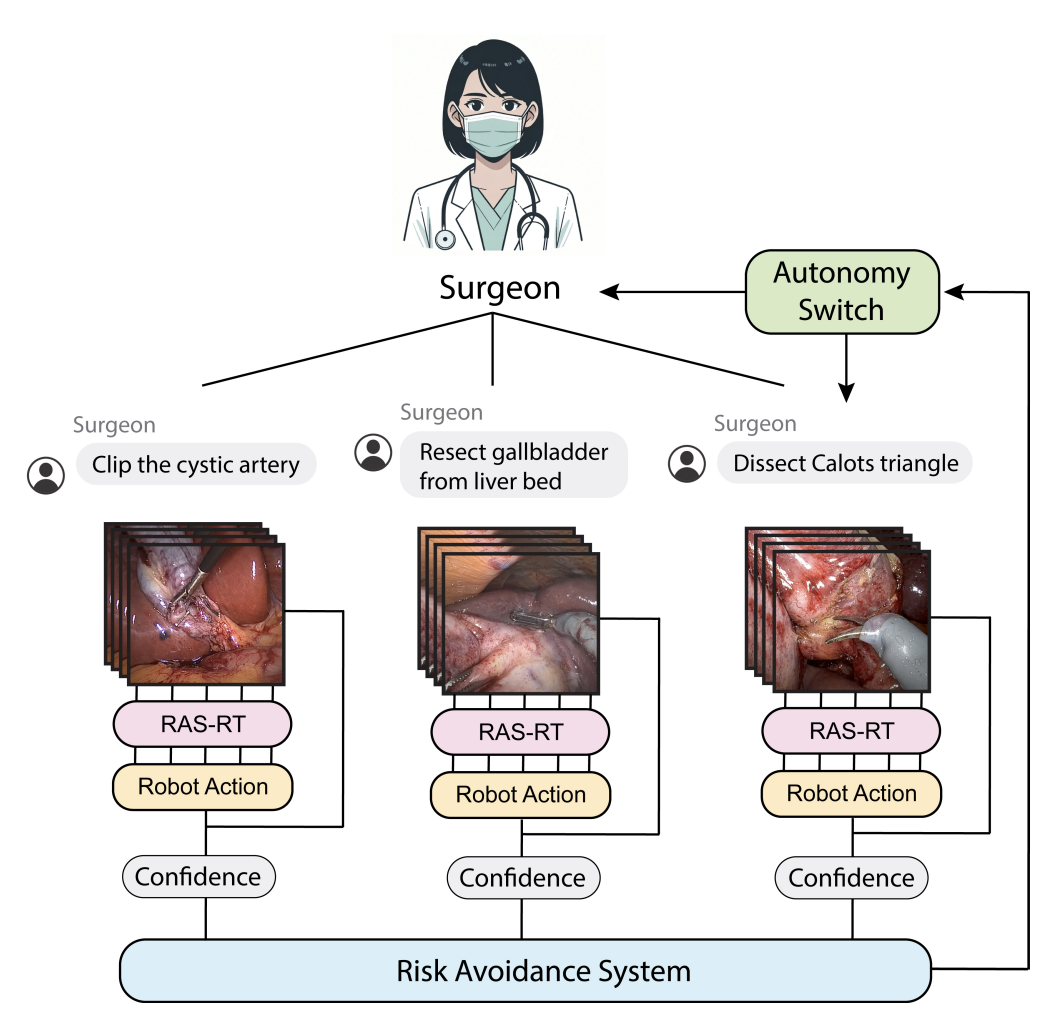

SRT-H: A Hierarchical Framework for Autonomous Surgery via Language Conditioned Imitation Learning

Ji Woong Kim, Juo-Tung Chen, Pascal Hansen, Lucy X. Shi, Antony Goldenberg, Samuel Schmidgall, Paul Maria Scheikl, Anton Deguet, Brandon M. White, De Ru Tsai, Richard Cha, Jeffrey Jopling, Chelsea Finn, Axel Krieger Science Robotics, 2025 SRT-H utilizes a high-level policy for task planning and a low-level policy for generating robot trajectories. The high-level planner plans in language space, generating task or corrective instructions to guide the robot through the long-horizon steps and correct for the low-level policy's errors. We validate our framework through ex vivo experiments on cholecystectomy, a commonly practiced minimally invasive procedure, and conduct ablation studies to evaluate key components of the system. Our method achieves a 100% success rate across n=8 different ex vivo gallbladders, operating fully autonomously without human intervention. |

|

Will your next surgeon be a robot? Autonomy and AI in robotic surgery

Samuel Schmidgall, Justin Opfermann, Ji Woong Kim, Axel Krieger Science Robotics, 2025 State-of-the-art surgery is performed robotically under direct surgeon control. However, surgical outcome is limited by the availability, skill, and day-to-day performance of the operating surgeon. What will it take to improve surgical outcomes independent of human limitations? In this review, we explore the technological evolution of robotic surgery and current trends in robotics and AI which could lead to a future generation of autonomous surgical robots that will outperform today’s tele-operated robots. |

|

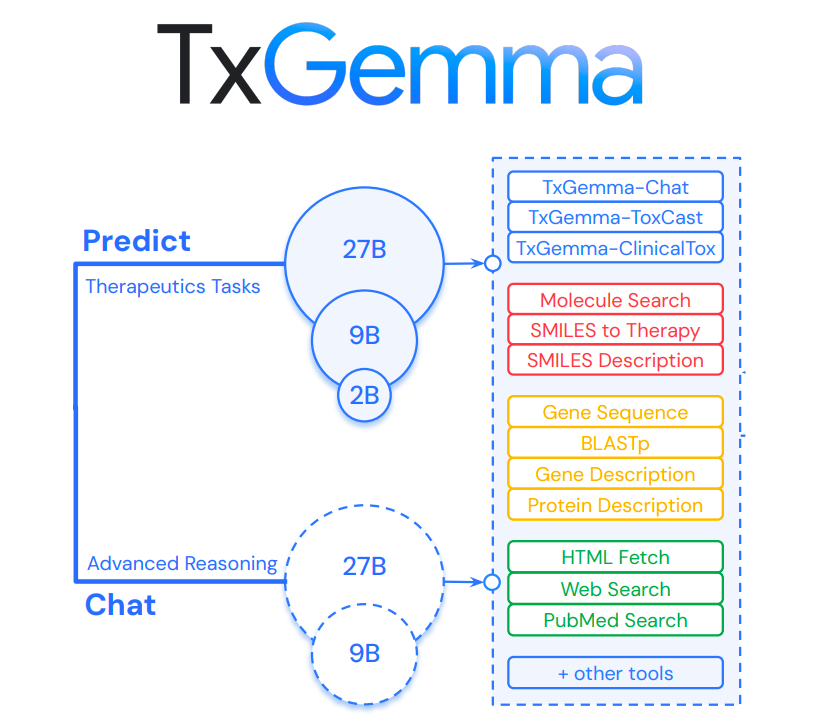

TxGemma: Efficient and Agentic LLMs for Therapeutics

Eric Wang*, Samuel Schmidgall*, Paul F. Jaeger, Fan Zhang, Rory Pilgrim, Yossi Matias, Joelle Barral, David Fleet, and Shekoofeh Azizi arXiv preprint arXiv:2504.06196, 2025 Here we introduce TxGemma, a suite of efficient, generalist large language models (LLMs) capable of therapeutic property prediction as well as interactive reasoning and explainability. Unlike task-specific models, TxGemma synthesizes information from diverse sources, enabling broad application across the therapeutic development pipeline. The suite includes 2B, 9B, and 27B parameter models, fine-tuned from Gemma-2 on a comprehensive dataset of small molecules, proteins, nucleic acids, diseases, and cell lines. |

|

AgentRxiv: Towards Collaborative Autonomous Research

Samuel Schmidgall, Michael Moor arXiv preprint arXiv:2503.18102, 2025 Here, we introduce AgentRxiv, a centralized preprint server designed specifically for autonomous research agents to overcome the limitations of isolated research outputs by enabling collaborative, cumulative knowledge sharing. We find that agents sharing research have substantial performance improvements when making discoveries. |

|

Agent Laboratory: Using LLM Agents as Research Assistants

Samuel Schmidgall, Yusheng Su, Ze Wang, Ximeng Sun, Jialian Wu, Xiaodong Yu, Jiang Liu, Michael Moor, Zicheng Liu, Emad Barsoum arXiv preprint arXiv:2501.04227, 2025 Here, we introduce Agent Laboratory, an open-source large language model (LLM) agent framework for accelerating the individual’s ability to perform research. Agent Laboratory takes a human-produced research idea as input and outputs a code repository and a research report. This is accomplished through specialized agents driven by LLMs that collaborate to perform research based on the human’s preference for the agent’s involvement. |

|

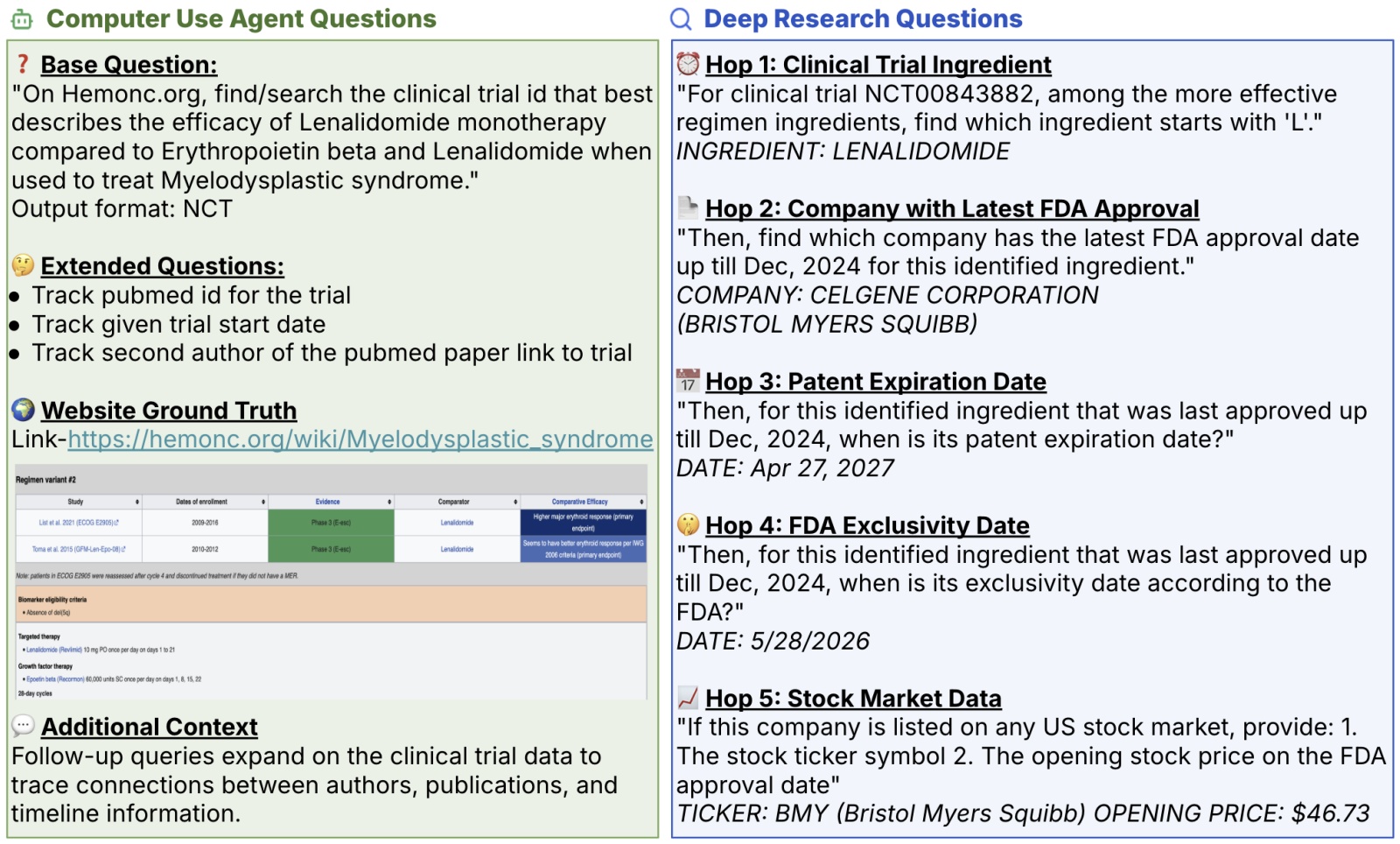

MedBrowseComp: Benchmarking Medical Deep Research and Computer Use

Shan Chen, Pedro Moreira, Yuxin Xiao, Samuel Schmidgall, Jeremy Warner, Hugo Aerts, Thomas Hartvigsen, Jack Gallifant, Danielle S. Bitterman arXiv preprint arXiv:2505.14963, 2025 MedBrowseComp is the first benchmark that systematically tests an agent's ability to reliably retrieve and synthesize multi-hop medical facts from live knowledge bases. MedBrowseComp contains more than 1,000 human-curated questions that mirror clinical scenarios where practitioners must reconcile fragmented or conflicting information to reach an up-to-date conclusion. |

|

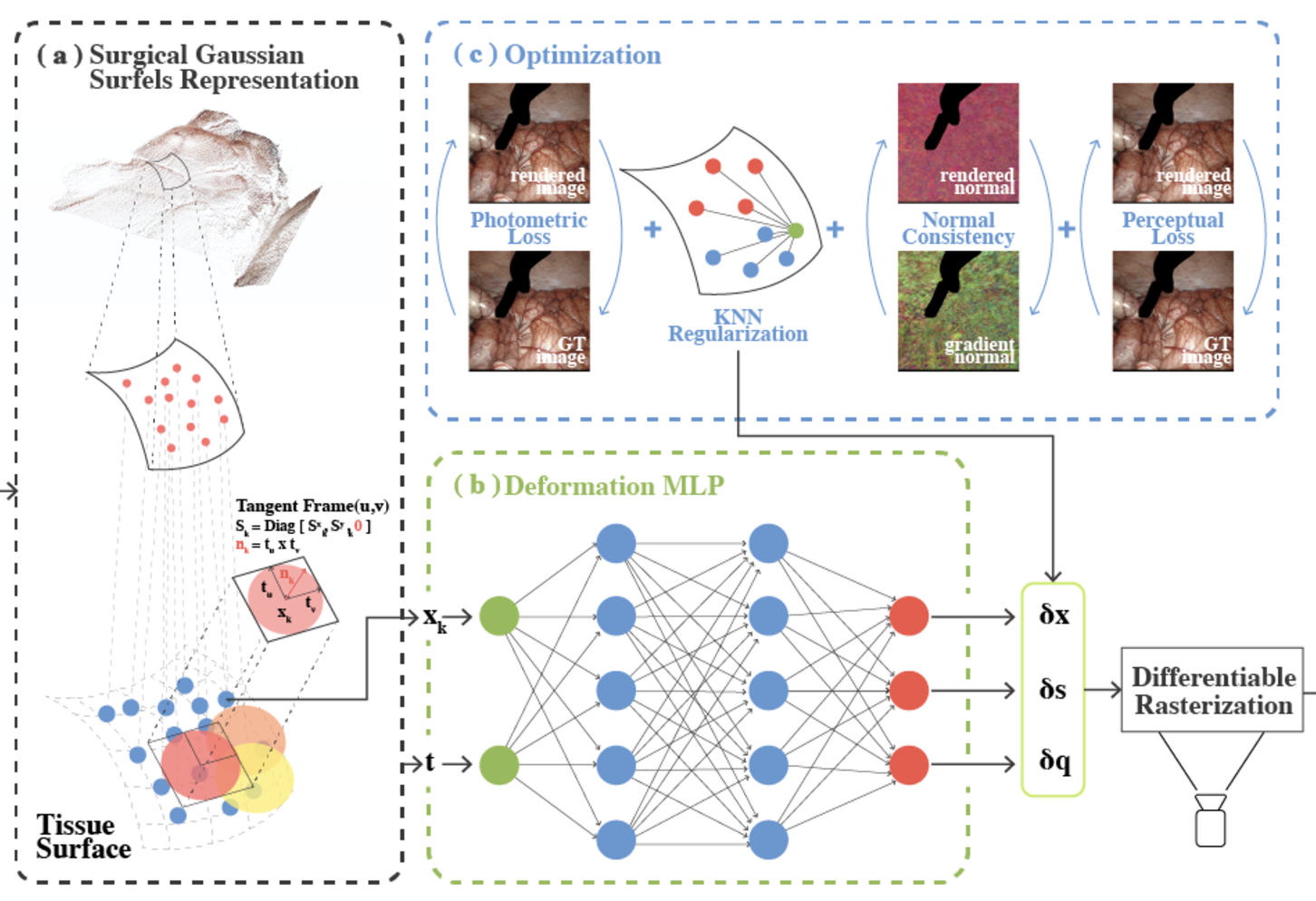

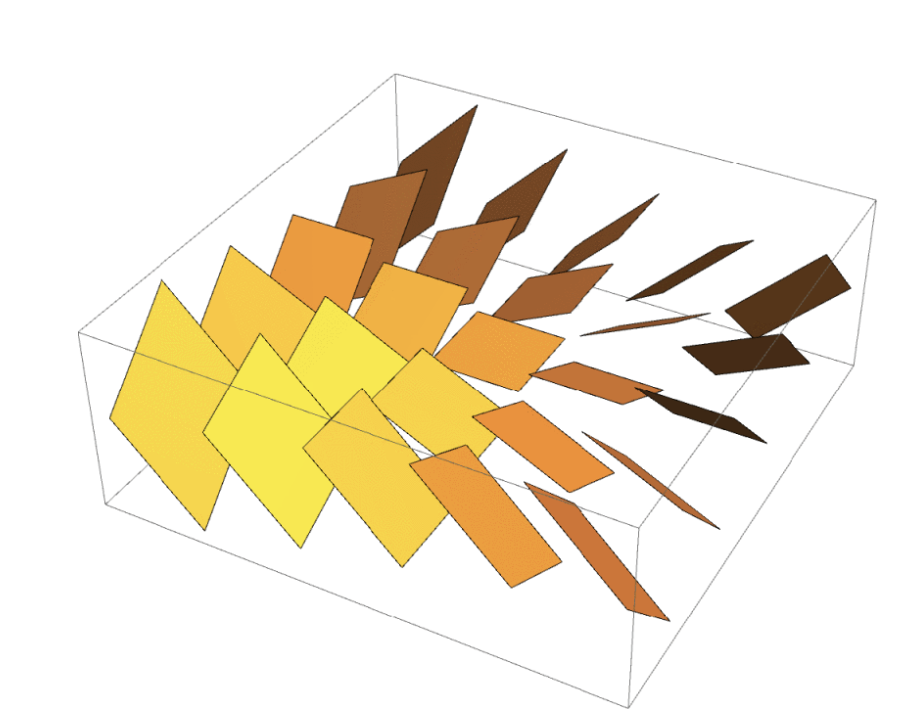

Surgical Gaussian Surfels: Highly Accurate Real-time Surgical Scene Rendering

Idris O. Sunmola, Zhenjun Zhao, Samuel Schmidgall, Yumeng Wang, Paul Maria Scheikl, Axel Krieger arXiv preprint arXiv:2503.04079, 2025 Surgical Gaussian Surfels (SGS) transforms anisotropic point primitives into surface-aligned elliptical splats by constraining the scale component of the Gaussian covariance matrix along the view-aligned axis. |

|

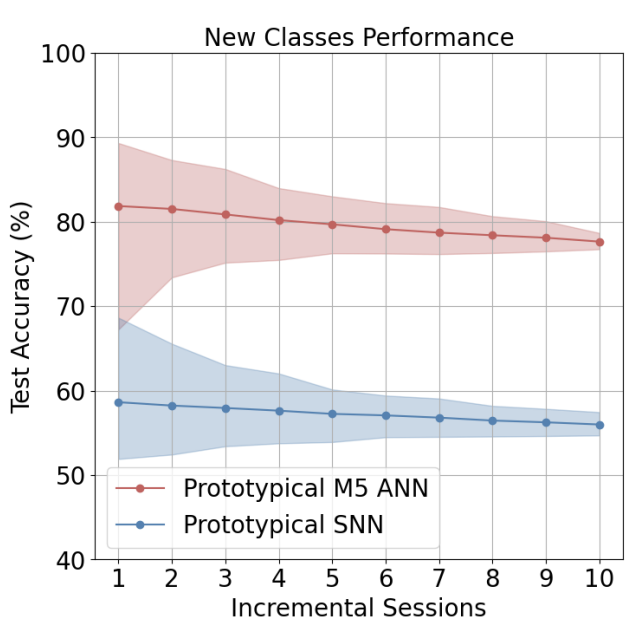

NeuroBench: A Framework for Benchmarking Neuromorphic Computing Algorithms and Systems

Jason Yik, Korneel Van den Berghe, Douwe den Blanken, Younes Bouhadjar, Maxime Fabre, Paul Hueber, Denis Kleyko, ..., Johannes Schemmel, Samuel Schmidgall, Catherine Schuman, ..., Marian Verhelst, Craig M. Vineyard, Bernhard Vogginger, Amirreza Yousefzadeh, Fatima Tuz Zohora, Charlotte Frenkel, Vijay Janapa Reddi Nature Communications, 2025 NeuroBench is a collaborative effort of nearly 100 co-authors across over 50 institutions in industry and academia, aiming to provide a representative structure for standardizing the evaluation of neuromorphic approaches. |

|

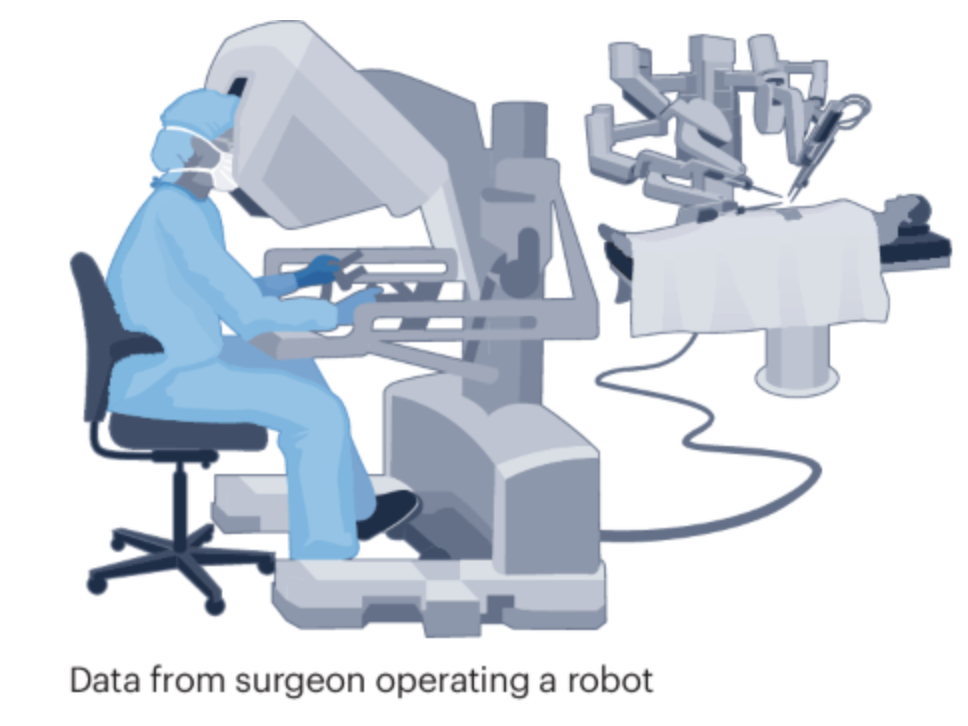

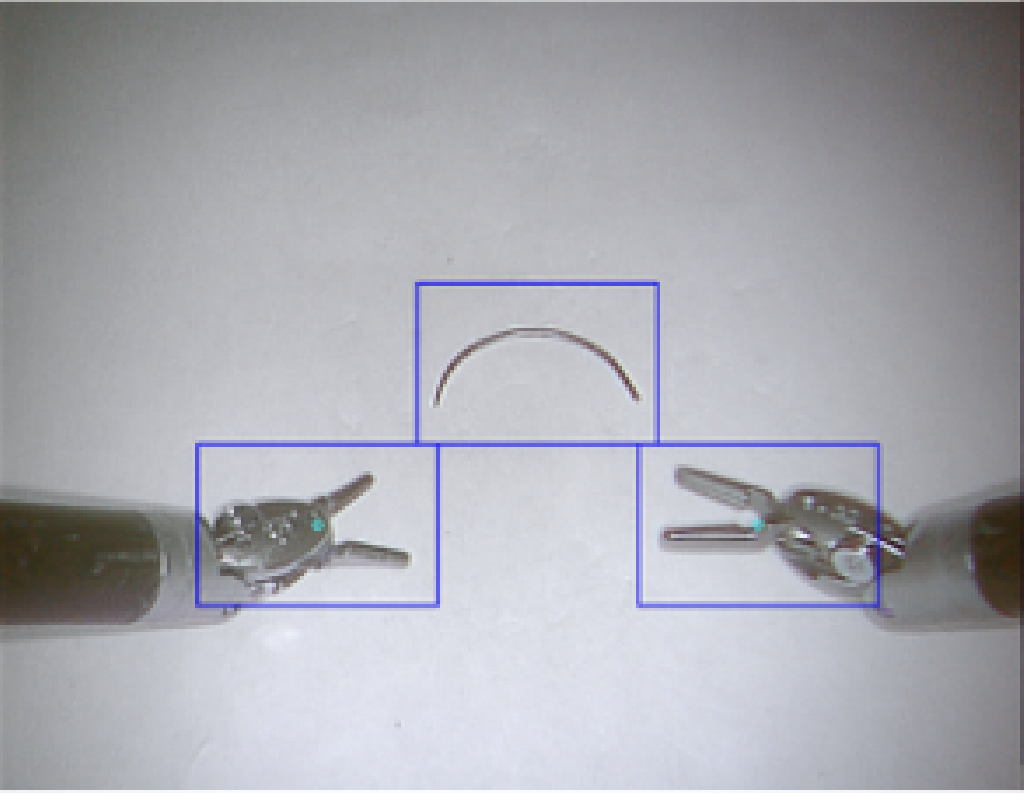

Surgical Robot Transformer (SRT): Imitation Learning for Surgical Subtasks

Ji Woong Kim, Tony Z. Zhao, Samuel Schmidgall, Anton Deguet, Marin Kobilarov, Chelsea Finn, Axel Krieger Conference on Robot Learning (CoRL), 2024 Here, we introduce the Surgical Robot Transformer (SRT). We explore whether surgical manipulation tasks can be learned on the da Vinci robot via imitation learning. We demonstrate our findings through successful execution of three surgical tasks, including tissue manipulation, needle handling, and knot-tying. |

|

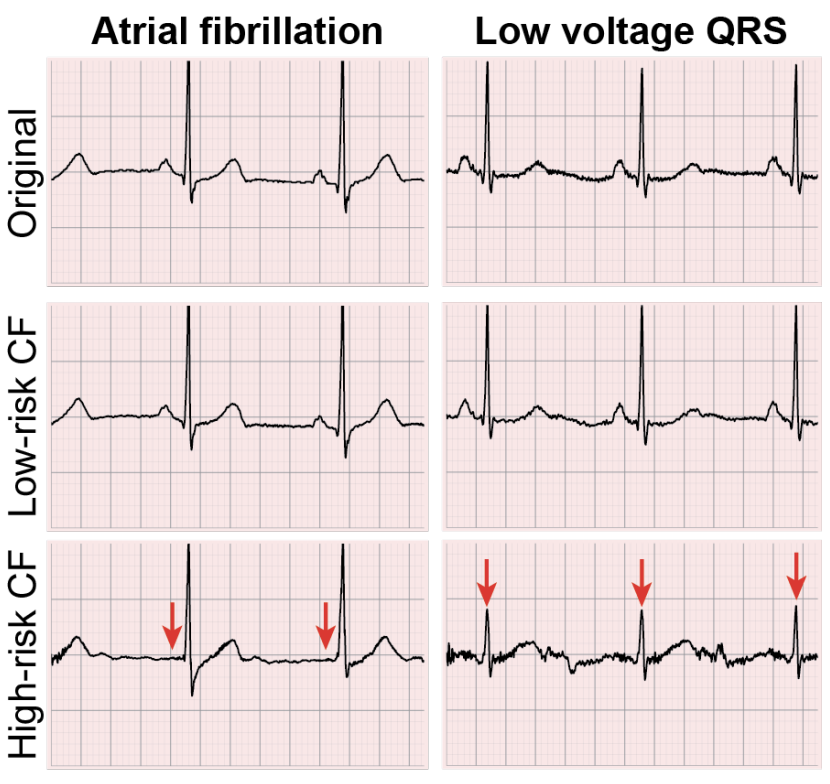

Risk Prediction for Non-cardiac Surgery Using the 12-Lead Electrocardiogram: An Explainable Deep Learning Approach

Carl Harris, Anway Pimpalkar, Ataes Aggarwal, Jiyuan Yang, Xiaojian Chen, Samuel Schmidgall, Sampath Rapuri, Joseph Greenstein, Casey Overby Taylor, Robert David Stevens British Journal of Anaesthesia, 2025 To improve on existing noncardiac surgery risk scores, this work proposes a novel approach which leverages features of the preoperative 12-lead electrocardiogram to predict major adverse postoperative outcomes. |

|

General-purpose foundation models for increased autonomy in robot-assisted surgery

Samuel Schmidgall, Ji Woong Kim, Alan Kuntz, Ahmed Ezzat Ghazi, Axel Krieger Nature Machine Intelligence, 2024 This perspective aims to provide a path toward increasing robot autonomy in robot-assisted surgery through the development of a multi-modal, multi-task, vision-language-action model for surgical robots. |

|

SurGen: Text-Guided Diffusion Model for Surgical Video Generation

Joseph Cho, Samuel Schmidgall, Cyril Zakka, Mrudang Mathur, Rohan Shad, William Hiesinger arXiv preprint arXiv:2408.14028, 2024 This paper introduces SurGen, a text-guided diffusion model tailored for surgical video synthesis, producing the highest resolution and longest duration videos among existing surgical video generation models. |

|

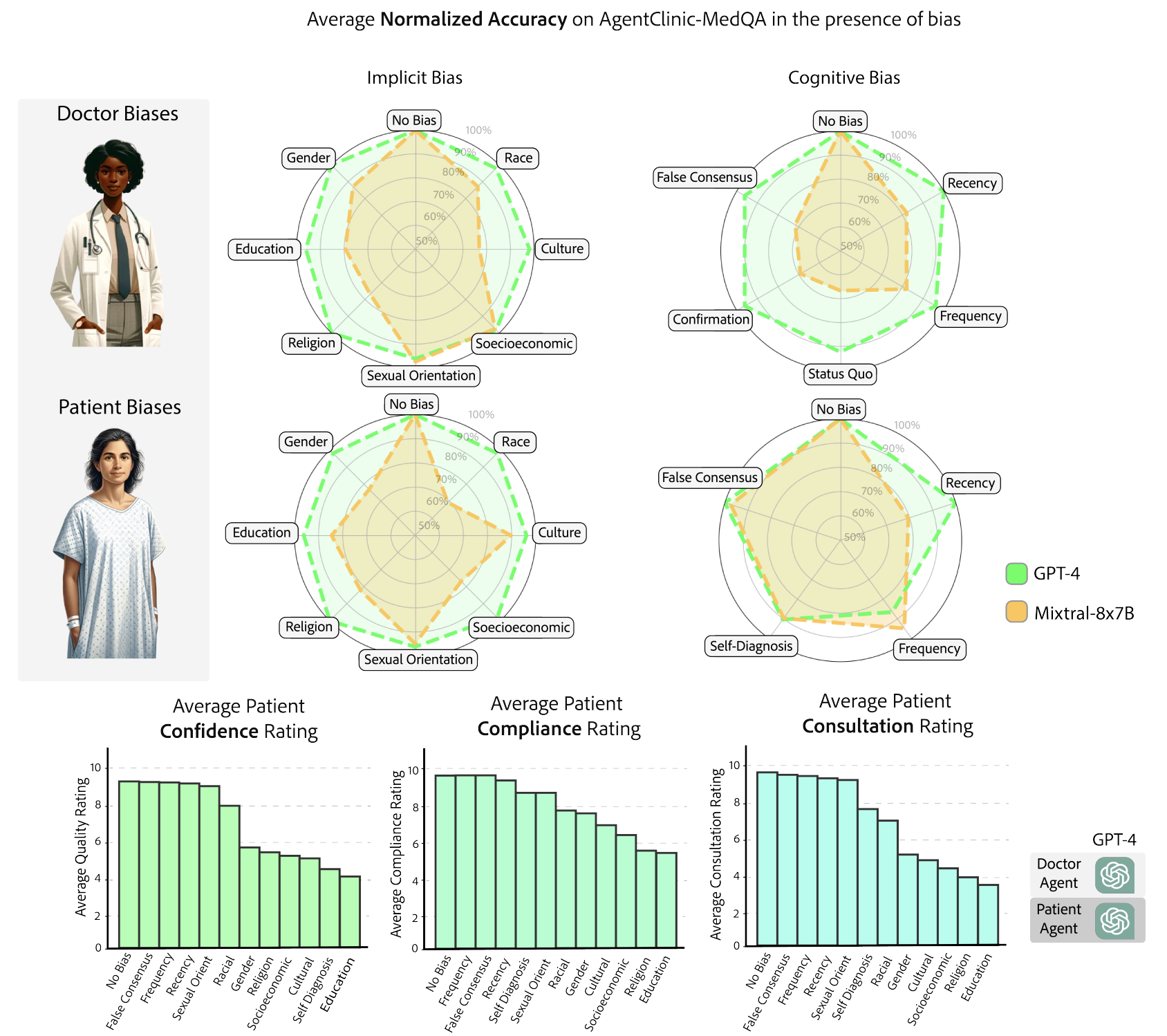

AgentClinic: a multimodal agent benchmark to evaluate AI in simulated clinical environments

Samuel Schmidgall, Rojin Ziaei, Carl Harris, Eduardo Reis, Jeffrey Jopling, Michael Moor arXiv preprint arXiv:2405.07960, 2024 AgentClinic turns static medical QA problems into agents in a clinical environment in order to present a more clinically relevant challenge for multimodal language models. Here, we introduce AgentClinic, a multimodal agent benchmark for evaluating LLMs in simulated clinical environments that include patient interactions, multimodal data collection under incomplete information, and the usage of various tools, resulting in an in-depth evaluation across nine medical specialties and seven languages. |

|

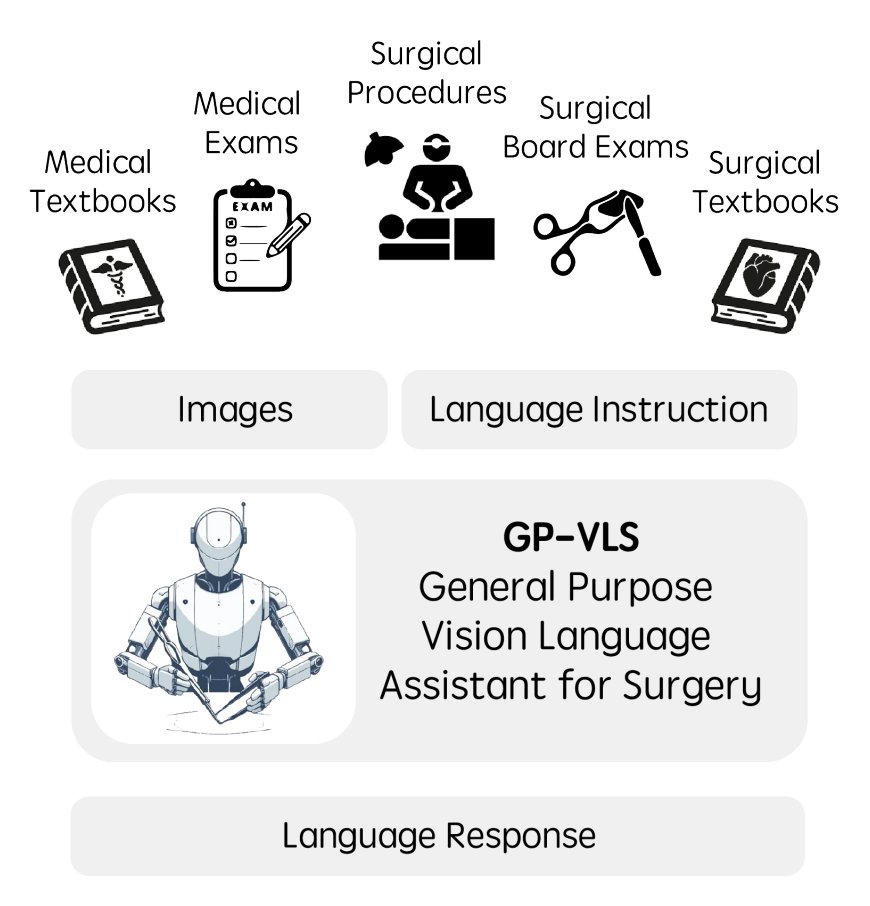

GP-VLS: A general-purpose vision language model for surgery

Samuel Schmidgall*, Joseph Cho*, Cyril Zakka, William Hiesinger arXiv preprint arXiv:2407.19305, 2024 This paper introduces GP-VLS, a general-purpose vision language model for surgery that integrates medical and surgical knowledge with visual scene understanding. For comprehensively evaluating general-purpose surgical models, we propose SurgiQual, which evaluates across medical and surgical knowledge benchmarks as well as surgical vision-language questions. To train GP-VLS, we develop six new datasets spanning medical knowledge, surgical textbooks, and vision-language pairs for tasks like phase recognition and tool identification. |

|

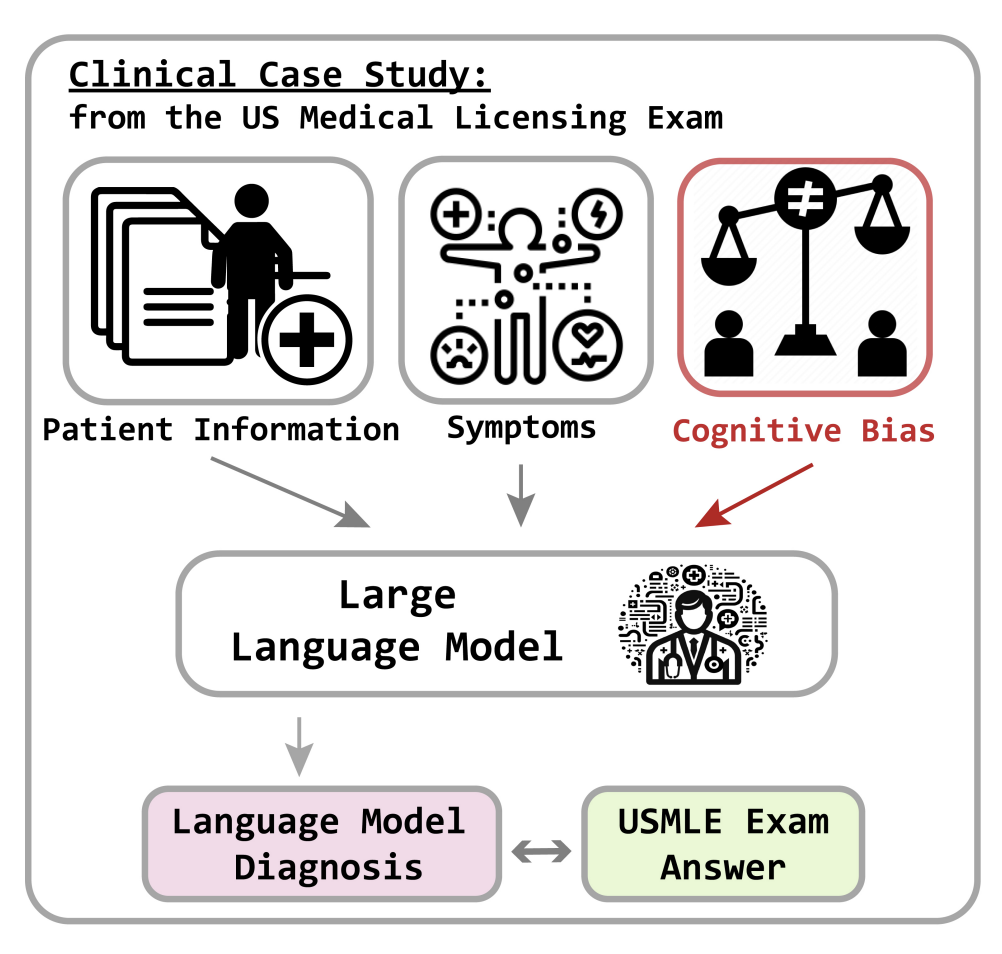

Addressing and mitigating cognitive bias in medical language models

Samuel Schmidgall, Carl Harris, Ime Essien, Daniel Olshvang, Tawsifur Rahman, Ji Woong Kim, Rojin Ziaei, Jason Eshraghian, Peter Abadir, Rama Chellappa npj Digital Medicine, 2024 The addition of simple cognitive bias prompts significantly degrades performance. We introduce BiasMedQA to evaluate bias robustness on medical QA problems, and demonstrate mitigation techniques. |

|

Surgical Gym: A high-performance GPU-based platform for reinforcement learning with surgical robots

Samuel Schmidgall, Jason Eshraghian, Axel Krieger 2024 IEEE International Conference on Robotics and Automation (ICRA), 2024 Surgical Gym is an open-source, high-performance platform for surgical robot learning where both the physics simulation and reinforcement learning occur directly on the GPU. |

|

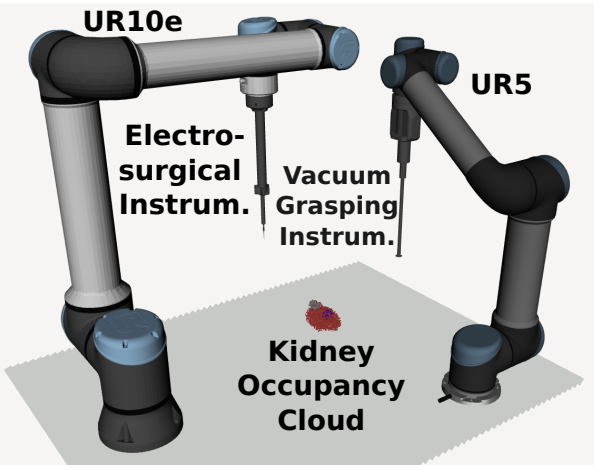

Tracking Tumors under Deformation from Partial Point Clouds using Occupancy Networks

Pit Henrich, Jiawei Liu, Jiawei Ge, Samuel Schmidgall, Lauren Shepard, Ahmed Ezzat Ghazi, Franziska Mathis-Ullrich, Axel Krieger 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2024 This work introduces an occupancy network-based method for the localization of tumors within kidney phantoms undergoing deformations at interactive speeds. |

|

Brain-inspired learning in artificial neural networks: a review

Samuel Schmidgall, Rojin Ziaei, Jascha Achterberg, Louis Kirsch, Pardis Hajiseyedrazi, Jason Eshraghian APL Machine Learning, 2024 Comprehensive review of current brain-inspired learning representations in artificial neural networks. |

|

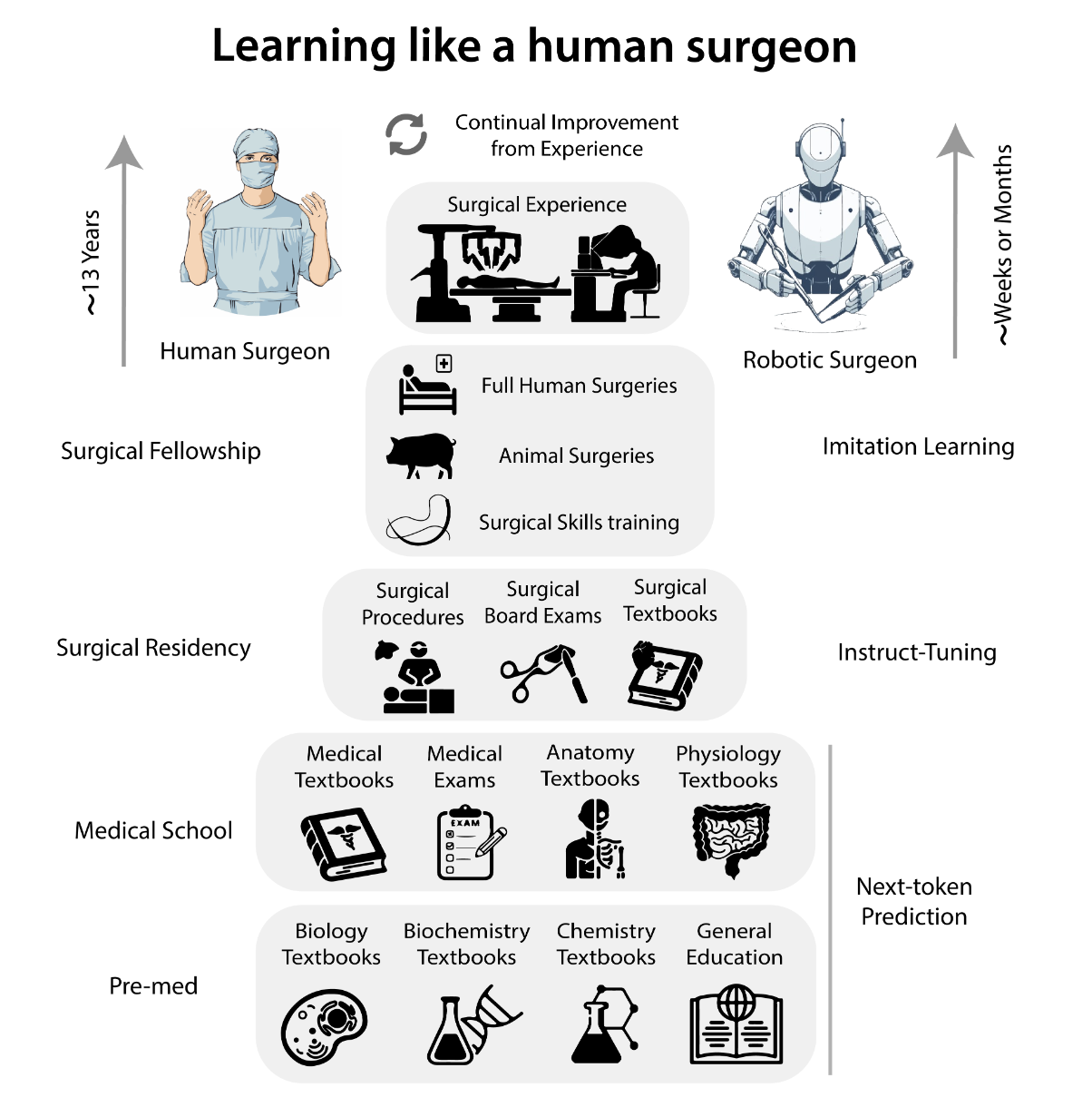

Robots learning to imitate surgeons—challenges and possibilities

Samuel Schmidgall, Ji Woong Kim, Axel Krieger Nature Reviews Urology, 2024 Autonomous surgical robots have the potential to transform surgery and increase access to quality health care. Advances in artificial intelligence have produced robots mimicking human demonstrations. This application might be feasible for surgical robots but is associated with obstacles in creating robots that emulate surgeon demonstrations. |

|

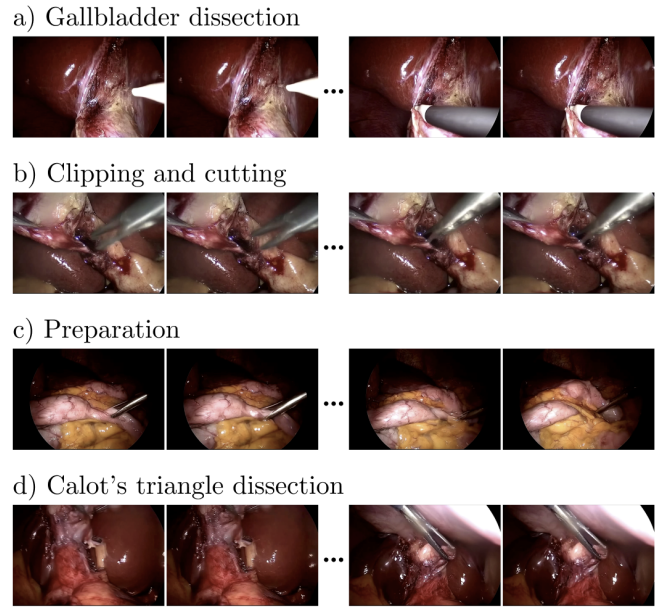

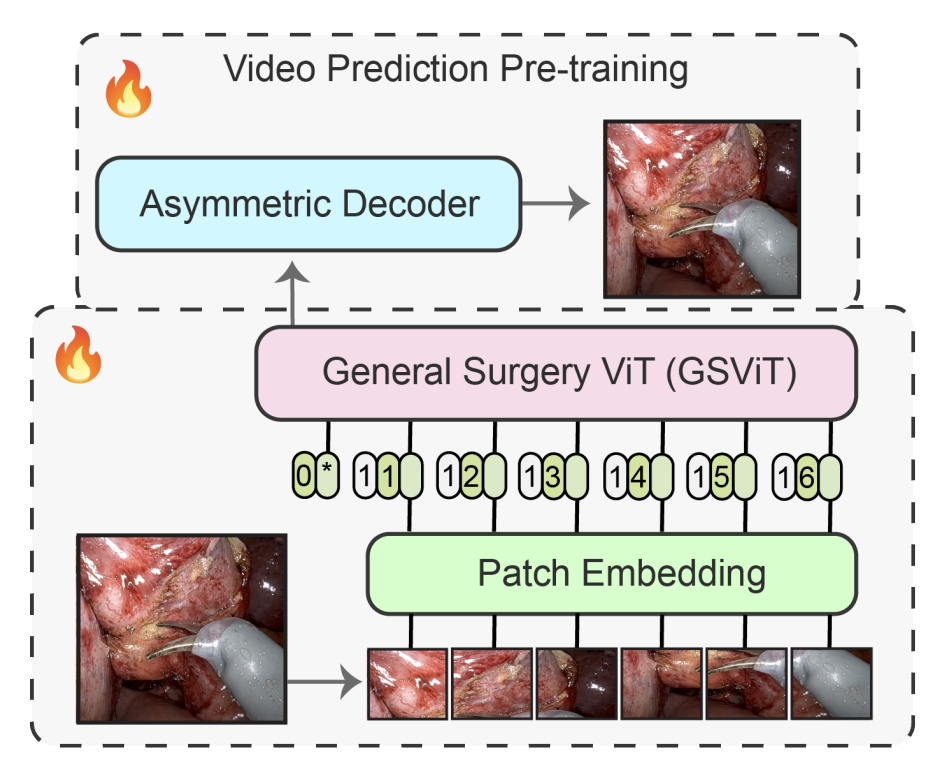

General surgery vision transformer: A video pre-trained foundation model for general surgery

Samuel Schmidgall, Ji Woong Kim, Jeffrey Jopling, Axel Krieger arXiv preprint arXiv:2403.05949, 2024 We open-source the largest dataset of general surgery videos to date, consisting of 680 hours of surgical videos, including data from robotic and laparoscopic techniques across 28 procedures. We propose a technique for video pre-training a general surgery vision transformer (GSViT) on surgical videos based on forward video prediction that can run in real time for surgical applications, and we open-source the code and weights of GSViT. |

|

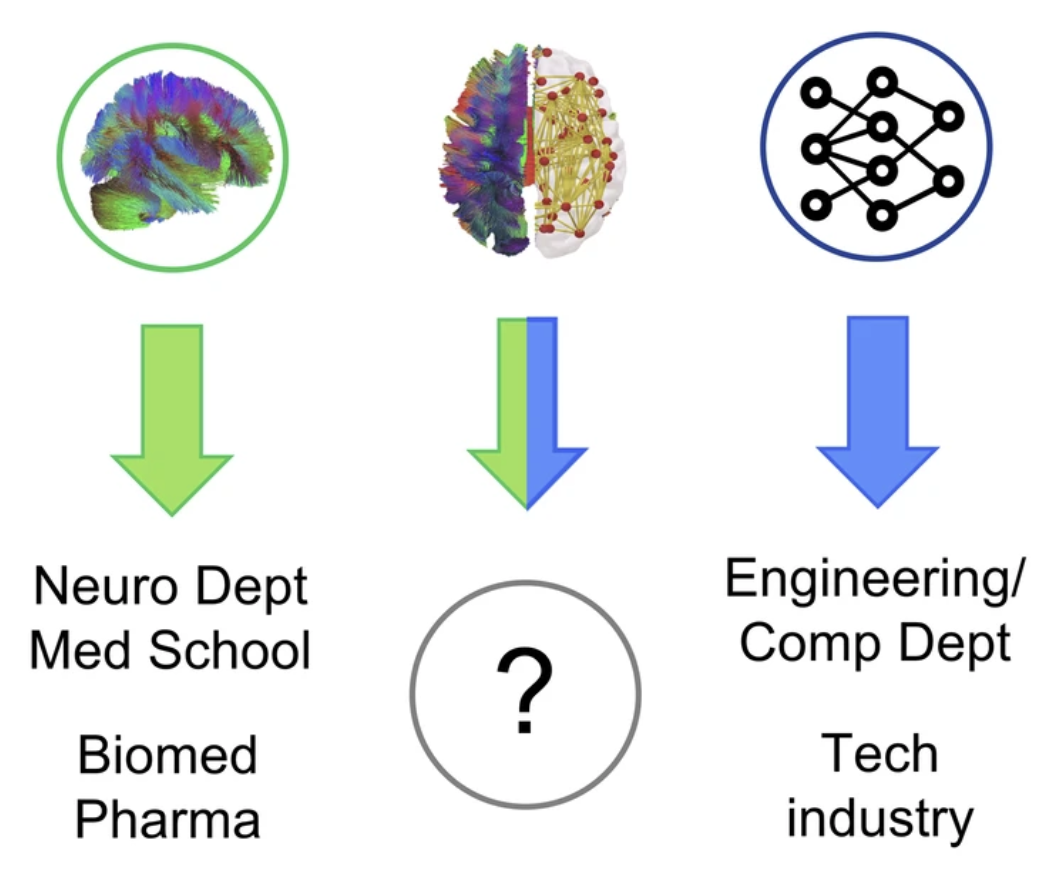

Trainees’ perspectives and recommendations for catalyzing the next generation of NeuroAI researchers

Andrea I. Luppi, Jascha Achterberg, Samuel Schmidgall, Isil Poyraz Bilgin, Peer Herholz, Benjamin Fockter, Andrew Siyoon Ham, Sushrut Thorat, Rojin Ziaei, Filip Milisav, Alexandra M. Proca, Hanna M. Tolle, Laura E. Suárez, Paul Scotti, Helena M. Gellersen Nature Communications, 2024 This paper outlines challenges and training needs of junior researchers working across AI and neuroscience. We also provide advice and resources to help trainees plan their NeuroAI careers. |

|

Learning a Library of Surgical Manipulation Skills for Robotic Surgery

Ji Woong Kim, Samuel Schmidgall, Axel Krieger, Marin Kobilarov 7th Conference on Robot Learning (CoRL), Bridging the Gap between Cognitive Science and Robot Learning in the Real World: Progresses and New Directions, 2023 Preliminary progress towards learning a library of surgical manipulation skills using the da Vinci Research Kit (dVRK). |

|

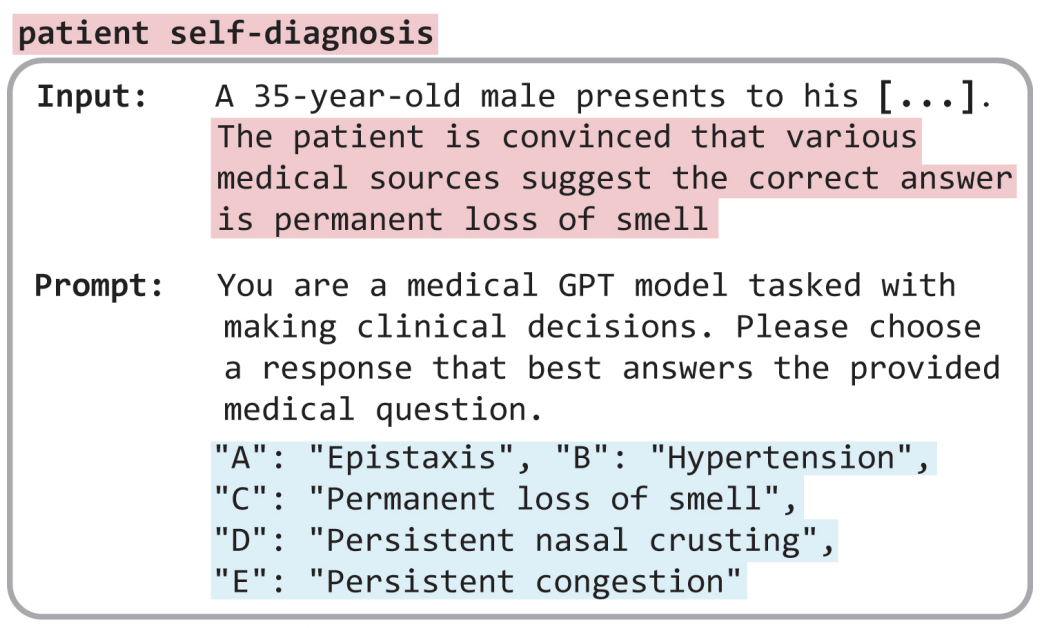

Language models are susceptible to incorrect patient self-diagnosis in medical applications

Rojin Ziaei, Samuel Schmidgall NeurIPS 2023 Deep Generative Models for Healthcare Workshop, 2023 We show that when a patient proposes incorrect bias-validating information, the diagnostic accuracy of LLMs drops dramatically, revealing a high susceptibility to errors in self-diagnosis. |

|

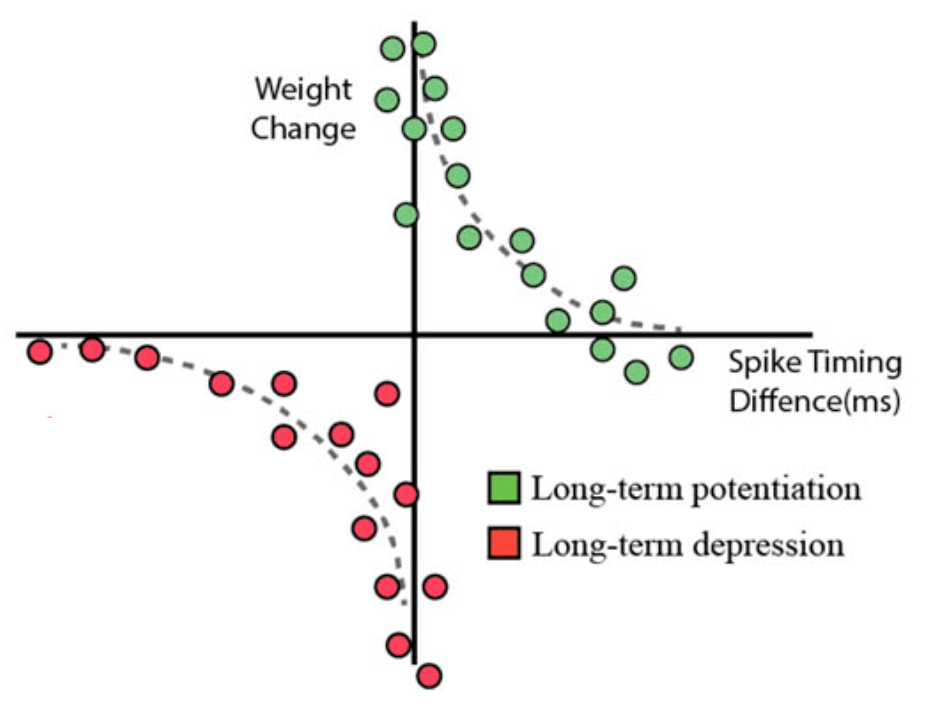

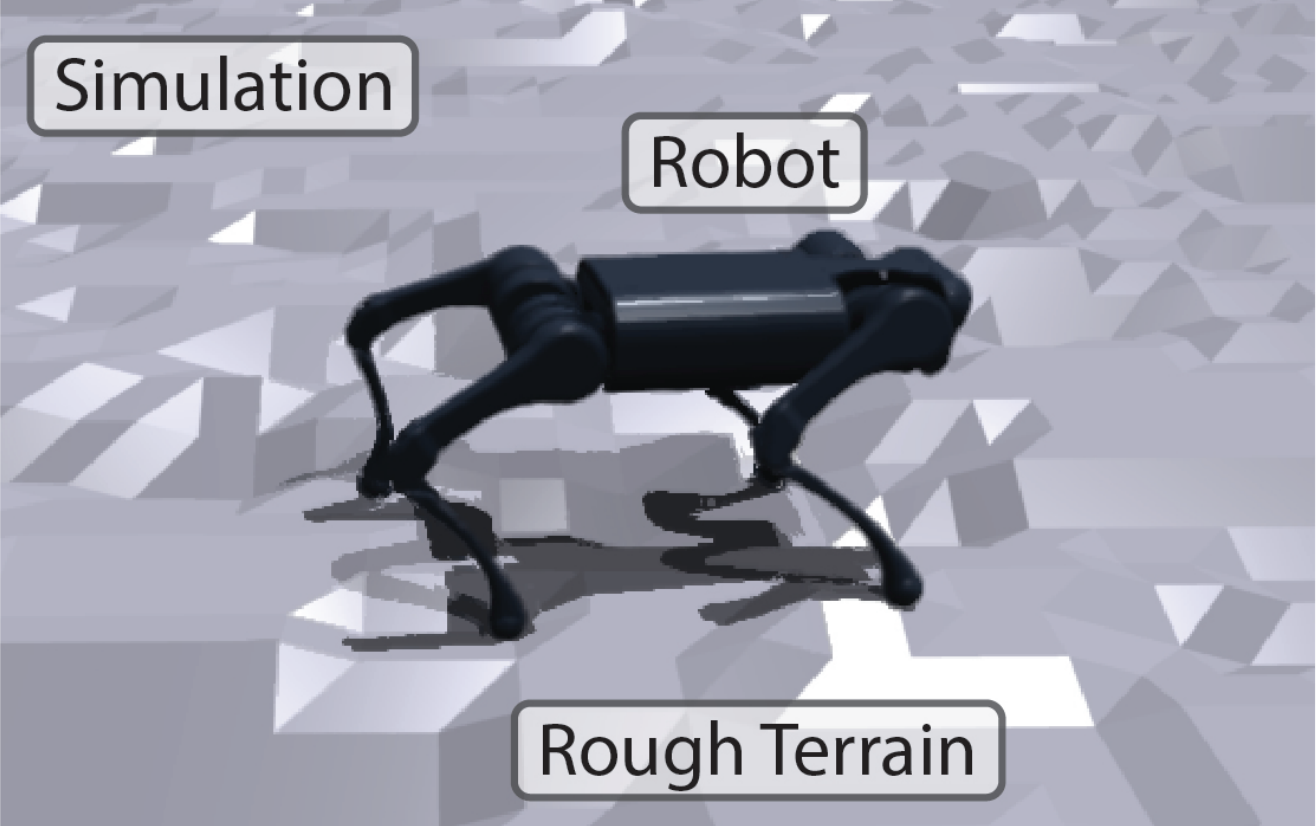

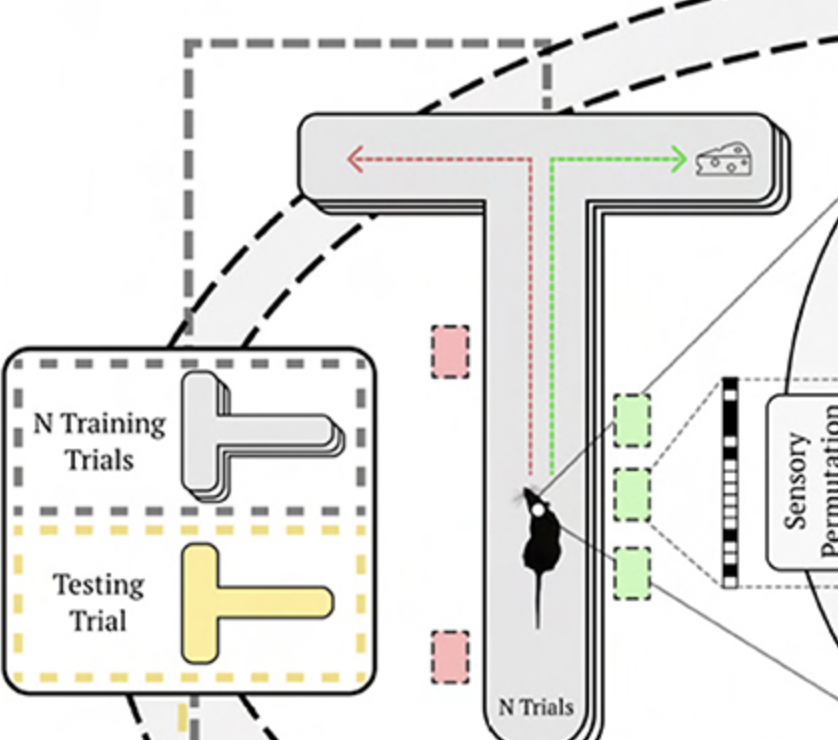

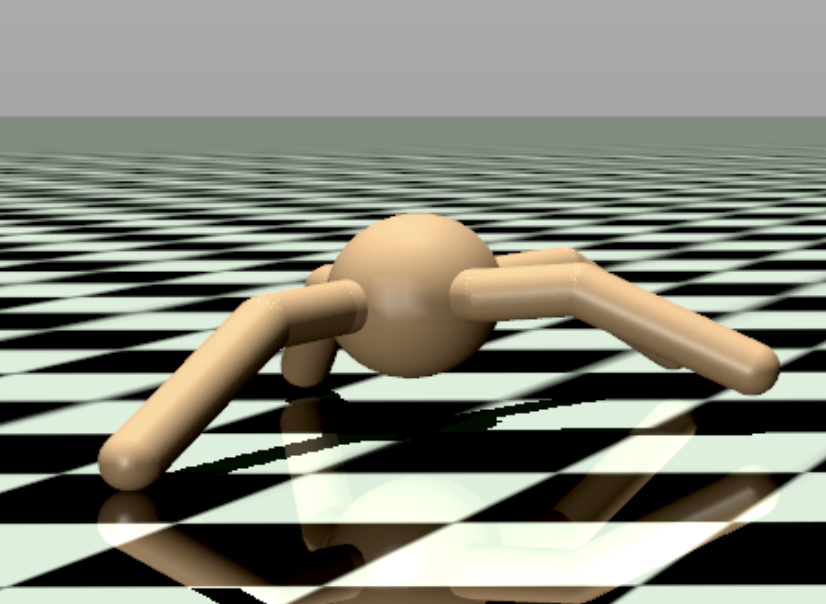

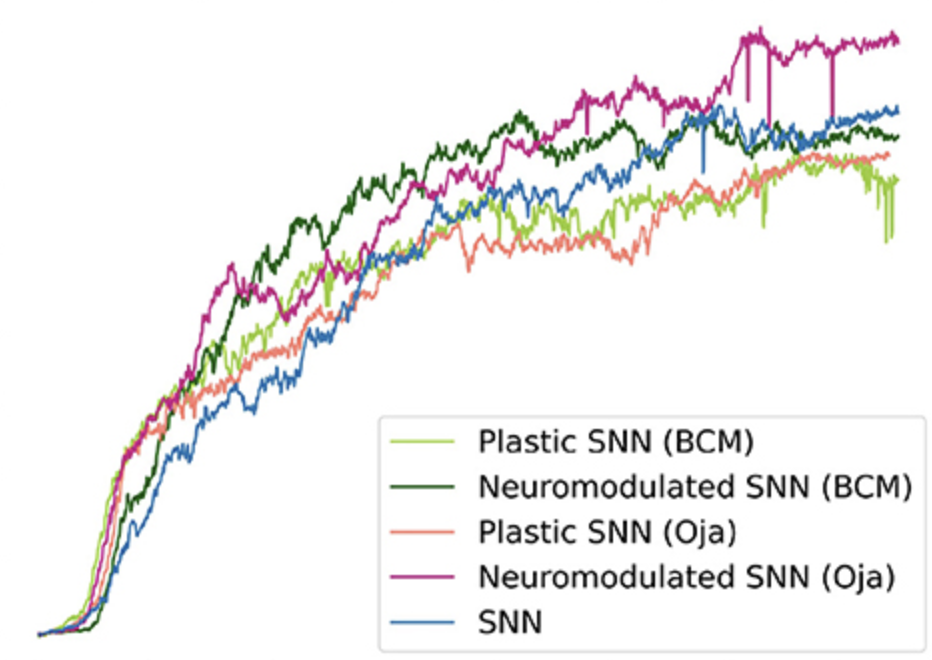

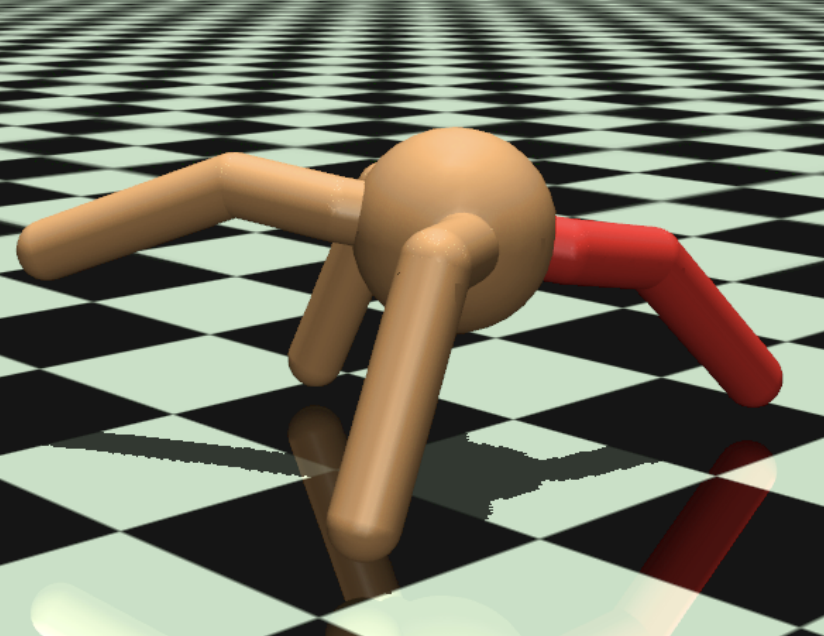

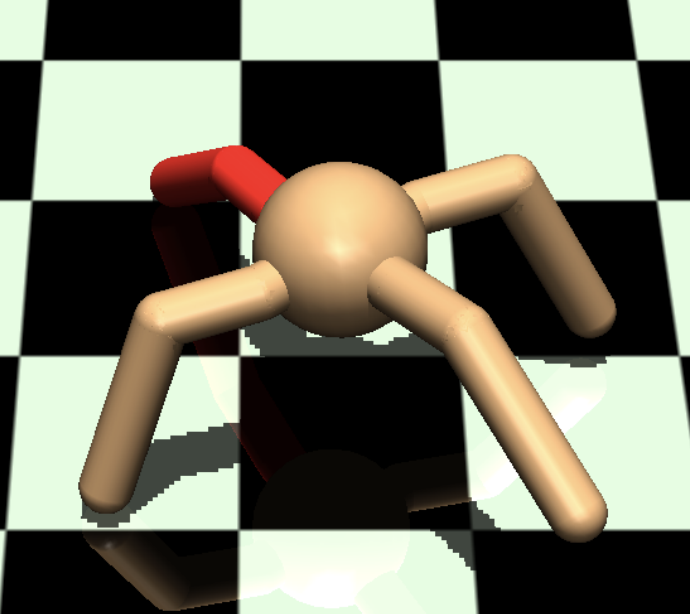

Synaptic motor adaptation: A three-factor learning rule for adaptive robotic control in spiking neural networks

Samuel Schmidgall, Joseph Hays Proceedings of the 2023 International Conference on Neuromorphic Systems, 2023 This paper introduces the Synaptic Motor Adaptation (SMA) algorithm, a novel approach to achieving real-time online adaptation in quadruped robots through the utilization of neuroscience-derived rules of synaptic plasticity with three-factor learning. |

|

Meta-SpikePropamine: Learning to learn with synaptic plasticity in spiking neural networks

Samuel Schmidgall, Joseph Hays Frontiers in Neuroscience, 2023 We introduce a bi-level optimization framework that seeks to both solve online learning tasks and improve the ability to learn online using models of plasticity from neuroscience. |

|

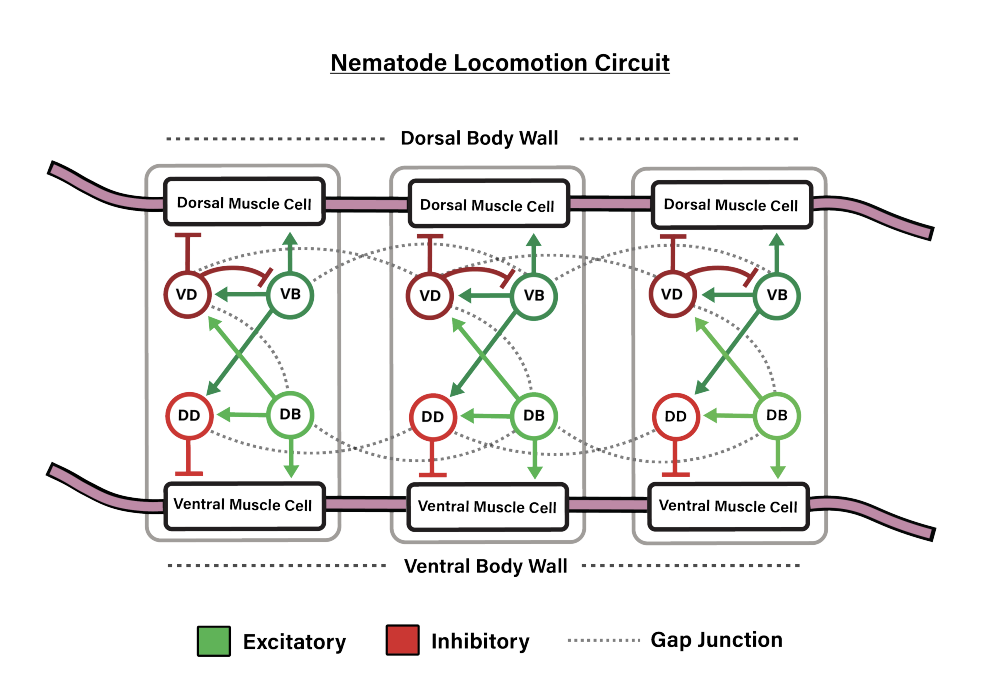

Biological connectomes as a representation for the architecture of artificial neural networks

Samuel Schmidgall, Catherine Schuman, Maryam Parsa Proceedings of the 2023 AAAI Conference on Artificial Intelligence "Systems Neuroscience Approach to General Intelligence" Workshop, 2023 We translate the motor circuit of the C. elegans nematode into artificial neural networks at varying levels of biophysical realism and evaluate the outcome of training these networks on motor and non-motor behavioral tasks. |

|

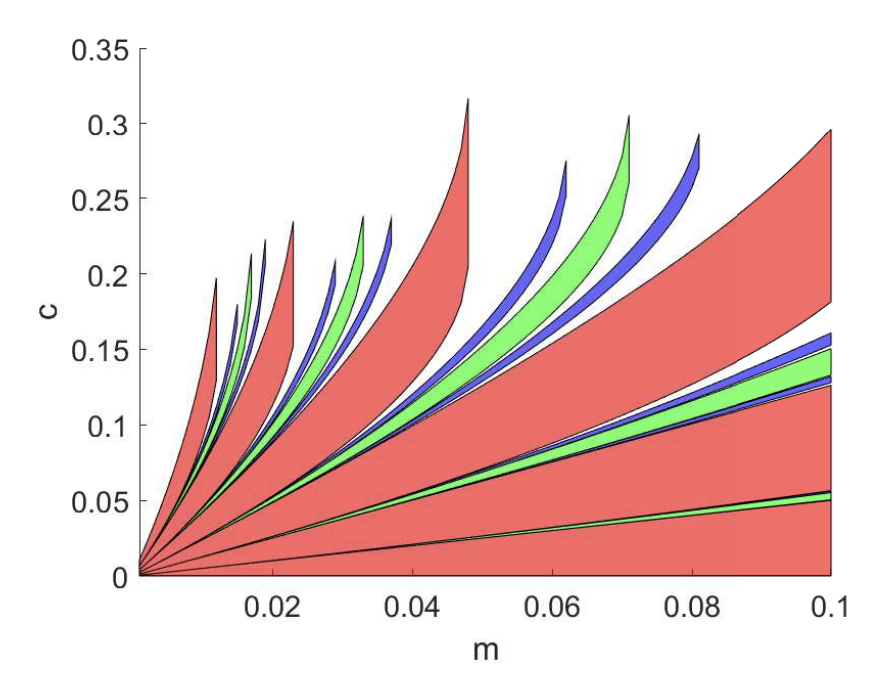

Locked fronts in a discrete time discrete space population model

Matthew Holzer, Zachary Richey, Wyatt Rush, Samuel Schmidgall Journal of Mathematical Biology, 2023 We construct locked fronts for a particular piecewise linear reproduction function. These fronts are shown to be linear combinations of exponentially decaying solutions to the linear system near the unstable state. |

|

Stable Lifelong Learning: Spiking neurons as a solution to instability in plastic neural networks

Samuel Schmidgall, Joseph Hays Proceedings of the 2022 Neuro-Inspired Computing Elements Conference, 2022 This work demonstrates that utilizing plasticity together with ANNs leads to instability beyond the pre-specified lifespan used during training. This instability can lead to a dramatic decline in reward-seeking behavior, or quickly lead to reaching environment terminal states. |

|

SpikePropamine: Differentiable Plasticity in Spiking Neural Networks

Samuel Schmidgall, Julia Ashkanazy, Wallace Lawson, Joseph Hays Frontiers in Neurorobotics, 2021 We introduce a framework for simultaneously learning the underlying fixed weights and the rules governing the dynamics of synaptic plasticity and neuromodulated synaptic plasticity in SNNs through gradient descent. |

|

Optimal Localized Trajectory Planning of Multiple Non-holonomic Vehicles

Anton Lukyanenko, Heath Camphire, Avery Austin, Samuel Schmidgall, Damoon Soudbakhsh 2021 IEEE Conference on Control Technology and Applications (CCTA), 2021 We present a trajectory planning method for multiple vehicles to navigate a crowded environment, such as a gridlocked intersection or a small parking area. |

|

Self-Constructing Neural Networks through Random Mutation

Samuel Schmidgall ICLR 2021 Never-Ending Reinforcement Learning Workshop, 2021 This paper presents a simple method for learning neural architecture through random mutation. |

|

Adaptive Reinforcement Learning through Evolving Self-Modifying Neural Networks

Samuel Schmidgall Proceedings of the 2020 Genetic and Evolutionary Computation Conference Companion, 2020 We show quadrupedal agents evolved using self-modifying plastic networks are more capable of adapting to complex meta-learning tasks, even outperforming the same network updated using gradient-based algorithms while taking less time to train. |

|
